NN.DROPOUT

CLASS torch.nn.Dropout(p=0.5, inplace=False)

Parameters

- p (float) – probability of an element to be zeroed. Default: 0.5

- inplace (bool) – If set to True, will do this operation in-place. Default: False

Shape:

- Input: (∗). Input can be of any shape

- Output: (∗). Output is of the same shape as input

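    import torch
    from torch import nn

    m = nn.Dropout(p=0.2)
    input = torch.randn(20, 16)
    output = m(input)

How does the module know whether it is currently in training mode? Its parameters do not include a training argument.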

==Resolution: the class inherits from Module, and Module defines self.training, so no separate training argument is needed.==

TORCH.NN.FUNCTIONAL.DROPOUT

torch.nn.functional.dropout(input, p=0.5, training=True, inplace=False)

Parameters

- p (float) – probability of an element to be zeroed. Default: 0.5

- training (bool) – apply dropout if is True. Default: True

- inplace (bool) – If set to True, will do this operation in-place. Default: False

Return type

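==The function has no inheritance relationship, so it needs an explicit training argument. It is still the lower-level primitive, though: the class calls this function internally and passes in the training value defined in its parent's parent class (Module).==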

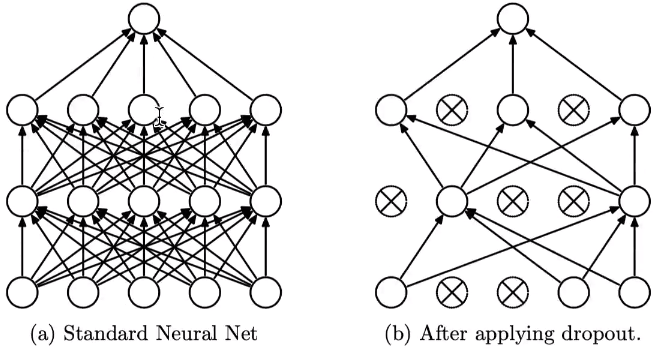

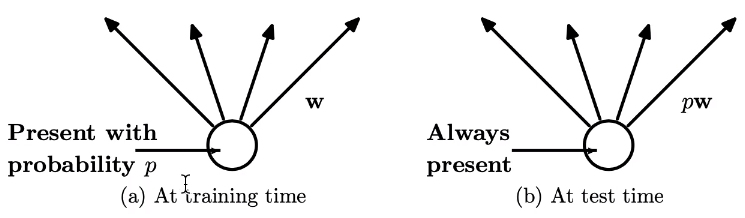

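With dropout, training behaves like ensemble learning: many different sub-networks are trained, while at test time a single network only approximates their combined prediction.

For the test-time output to approximate the expected value seen during training, the weights $w$ have to be multiplied by $p$ at test time.

The reason is that during training each neuron connection is kept with probability $p$, so at test time the weights are multiplied by that probability. Note that $p$ here is the keep probability, whereas the p argument of nn.Dropout is the probability of zeroing an element.

In practice, however, to keep test time as cheap as possible, implementations apply a scaling factor during training instead.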
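The argument in the preceding paragraphs can be written out as a short expectation calculation. The symbols $m$ (a random keep/drop mask), $x$ (an input activation), and $r$ (the drop ratio, $r = 1 - p$) are notation introduced here for illustration, with $p$ taken as the keep probability as in the text.

$$
m \sim \mathrm{Bernoulli}(p), \qquad \mathbb{E}[\, m \, w x \,] = p \, w x
$$

Testing with the scaled weight $p\,w$ therefore reproduces the training-time expectation. If the mask is divided by $p$ during training instead, the expectation already equals the unscaled output:

$$
\mathbb{E}\!\left[\frac{m}{p} \, w x\right] = w x, \qquad \frac{1}{p} = \frac{1}{1 - r}
$$

so the weights can be used unchanged at test time. The equalities hold exactly for a single linear unit; once nonlinear layers are stacked, the match is only approximate.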

    from .module import Module
    from .. import functional as F

    from torch import Tensor

    __all__ = ['Dropout', 'Dropout1d', 'Dropout2d', 'Dropout3d', 'AlphaDropout', 'FeatureAlphaDropout']


    class _DropoutNd(Module):
        __constants__ = ['p', 'inplace']
        p: float
        inplace: bool

        def __init__(self, p: float = 0.5, inplace: bool = False) -> None:
            super().__init__()
            if p < 0 or p > 1:
                raise ValueError(f"dropout probability has to be between 0 and 1, but got {p}")
            self.p = p
            self.inplace = inplace

        def extra_repr(self) -> str:
            return f'p={self.p}, inplace={self.inplace}'


    class Dropout(_DropoutNd):
        def forward(self, input: Tensor) -> Tensor:
            return F.dropout(input, self.p, self.training, self.inplace)


    class Dropout1d(_DropoutNd):
        # Only a single Tensor argument; no explicit training argument
        def forward(self, input: Tensor) -> Tensor:
            # Calls the functional form. Where does self.training come from? From class Module
            return F.dropout1d(input, self.p, self.training, self.inplace)


    class Dropout2d(_DropoutNd):
        def forward(self, input: Tensor) -> Tensor:
            return F.dropout2d(input, self.p, self.training, self.inplace)


    class Dropout3d(_DropoutNd):
        def forward(self, input: Tensor) -> Tensor:
            return F.dropout3d(input, self.p, self.training, self.inplace)


    class AlphaDropout(_DropoutNd):
        def forward(self, input: Tensor) -> Tensor:
            return F.alpha_dropout(input, self.p, self.training)


    class FeatureAlphaDropout(_DropoutNd):
        def forward(self, input: Tensor) -> Tensor:
            return F.feature_alpha_dropout(input, self.p, self.training)

    ...

        # Methods of Module
        def train(self: T, mode: bool = True) -> T:
            if not isinstance(mode, bool):
                raise ValueError("training mode is expected to be boolean")
            self.training = mode
            for module in self.children():
                module.train(mode)
            return self

        def eval(self: T) -> T:
            return self.train(False)

    ...
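To make the flow in the excerpt above concrete without depending on PyTorch, here is a minimal pure-Python sketch of the same pattern: a base class owns the `training` flag, `train()` and `eval()` propagate it to child modules, and the layer forwards it to a stateless function. `ToyModule`, `ToyDropout`, `ToyStack`, and `toy_dropout` are hypothetical names used for illustration only; they are not part of PyTorch, and the list-based arithmetic is a simplification.

```python
import random


def toy_dropout(values, p=0.5, training=True, rng=random):
    """Stateless dropout on a list of floats (hypothetical helper)."""
    if not training or p == 0.0:
        return list(values)            # identity outside training
    if p >= 1.0:
        return [0.0 for _ in values]   # everything is dropped
    scale = 1.0 / (1.0 - p)            # rescale the survivors
    return [v * scale if rng.random() >= p else 0.0 for v in values]


class ToyModule:
    """Base class that owns the `training` flag."""

    def __init__(self):
        self.training = True           # modules start in training mode
        self._children = []

    def children(self):
        return list(self._children)

    def train(self, mode=True):
        if not isinstance(mode, bool):
            raise ValueError("training mode is expected to be boolean")
        self.training = mode
        for child in self.children():
            child.train(mode)          # propagate to sub-modules
        return self

    def eval(self):
        return self.train(False)


class ToyDropout(ToyModule):
    def __init__(self, p=0.5):
        super().__init__()
        self.p = p

    def forward(self, values):
        # No `training` argument here: the inherited flag is forwarded.
        return toy_dropout(values, self.p, self.training)


class ToyStack(ToyModule):
    """Container holding child modules (stands in for a model)."""

    def __init__(self, *layers):
        super().__init__()
        self._children = list(layers)


model = ToyStack(ToyDropout(p=0.2))
drop = model.children()[0]

model.eval()                           # flips the flag on every child
eval_out = drop.forward([1.0, 2.0, 3.0])

model.train()                          # back to training mode
train_out = drop.forward([1.0, 2.0, 3.0])
```

In this sketch, calling `eval()` once on the top-level container is enough for the dropout layer to become an identity, which mirrors why the layer itself needs no `training` parameter.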

caffe2 source code

GitHub: https://github.com/facebookarchive/caffe2

    if (is_test_) {
      if (!IsInputOutputAlias(0, 0)) {
        context_.CopyFromCPU<float>(
            X.numel(), X.data<float>(), Y->template mutable_data<float>());
      }
      return true;
    } else {
      // Compute a scaling factor; ratio is the fraction to drop. (The work is
      // moved into the training phase so that test time is as short as possible.)
      // NOLINTNEXTLINE(cppcoreguidelines-narrowing-conversions,bugprone-narrowing-conversions)
      float scale = ratio_ >= 1.0 ? 0.0:1. / (1. - ratio_);
      // mask=true means keep, and mask=false means not keep, so we will
      // generate probability depending on 1-ratio.
      // The Bernoulli distribution is parameterized by 1 - ratio
      at::bernoulli_distribution<double> dist(1. - ratio_);
      const float* Xdata = X.data<float>();
      float* Ydata = Y->template mutable_data<float>();
      auto mask = Output(1, X.sizes(), at::dtype<bool>());
      bool* mask_data = mask->template mutable_data<bool>();
      // Random generator used to sample the mask
      auto* gen = context_.RandGenerator();
      for (int i = 0; i < X.numel(); ++i) {
        // Greater than 0.5 gives 1 (keep); otherwise 0 (drop)
        mask_data[i] = dist(gen) > 0.5;
        // NOLINTNEXTLINE(cppcoreguidelines-narrowing-conversions,bugprone-narrowing-conversions)
        Ydata[i] = Xdata[i] * scale * mask_data[i];
      }
      return true;
    }
    }
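For readers less familiar with C++, the same control flow can be sketched in plain Python. This is an illustrative translation under assumptions, not caffe2 code: `dropout_forward` and its arguments are hypothetical names, the input is a flat list of floats, and Python's `random` module stands in for the caffe2 random generator.

```python
import random


def dropout_forward(x, ratio, is_test, rng=None):
    """Return (y, mask) for a flat list of floats `x` (hypothetical helper)."""
    if is_test:
        # Test mode: plain copy, no mask and no scaling.
        return list(x), None

    rng = rng or random.Random()
    # Survivors are scaled by 1 / (1 - ratio); if everything is dropped,
    # the scale is set to 0 to avoid dividing by zero.
    scale = 0.0 if ratio >= 1.0 else 1.0 / (1.0 - ratio)

    y, mask = [], []
    for value in x:
        keep = rng.random() < 1.0 - ratio  # True with probability 1 - ratio
        mask.append(keep)
        y.append(value * scale * keep)     # a bool multiplies as 0 or 1
    return y, mask


x = [1.0, -2.0, 3.0, 4.0]
y_test, _ = dropout_forward(x, ratio=0.5, is_test=True)
y_train, mask = dropout_forward(x, ratio=0.5, is_test=False, rng=random.Random(0))
```

With `ratio=0.5`, every kept element of `y_train` is twice the corresponding input and every dropped element is zero, while `y_test` is an unmodified copy.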

Two ways to implement it in numpy

First implementation, which rescales at test time:

    import numpy as np

    def train(ratio, x, w1, b1, w2, b2):
        layer1 = np.maximum(0, np.dot(w1, x) + b1)
        # Keep each unit with probability 1 - ratio
        mask1 = np.random.binomial(1, 1-ratio, layer1.shape)
        layer1 = layer1 * mask1

        layer2 = np.maximum(0, np.dot(w2, layer1) + b2)
        mask2 = np.random.binomial(1, 1-ratio, layer2.shape)
        layer2 = layer2 * mask2

        return layer2

    def test(ratio, x, w1, b1, w2, b2):
        layer1 = np.maximum(0, np.dot(w1, x) + b1)
        # Scale by the keep probability instead of masking
        layer1 = layer1 * (1-ratio)

        layer2 = np.maximum(0, np.dot(w2, layer1) + b2)
        layer2 = layer2 * (1-ratio)

        return layer2

Second implementation, which additionally divides by (1-ratio) during training so that the test pass needs no scaling:

    import numpy as np

    def train(ratio, x, w1, b1, w2, b2):
        layer1 = np.maximum(0, np.dot(w1, x) + b1)
        mask1 = np.random.binomial(1, 1-ratio, layer1.shape)
        layer1 = layer1 * mask1
        # Additionally divide by (1-ratio)
        layer1 = layer1/(1-ratio)

        layer2 = np.maximum(0, np.dot(w2, layer1) + b2)
        mask2 = np.random.binomial(1, 1-ratio, layer2.shape)
        layer2 = layer2 * mask2
        layer2 = layer2/(1-ratio)

        return layer2

    def test(x, w1, b1, w2, b2):
        layer1 = np.maximum(0, np.dot(w1, x) + b1)
        layer2 = np.maximum(0, np.dot(w2, layer1) + b2)
        return layer2
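A quick way to check that the two variants agree in expectation is to simulate a single activation many times. The sketch below uses only the standard library rather than numpy; `mean_train_output` is a hypothetical helper, and the values of `ratio`, `a`, the seed, and the trial count are arbitrary choices.

```python
import random


def mean_train_output(a, ratio, inverted, trials=20000, seed=0):
    """Average training-time output of one activation `a` under dropout."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        keep = rng.random() < 1.0 - ratio  # keep with probability 1 - ratio
        out = a if keep else 0.0
        if inverted:
            out /= 1.0 - ratio             # second variant rescales here
        total += out
    return total / trials


ratio, a = 0.3, 2.0

# First variant: the training mean should approach a * (1 - ratio),
# which is exactly what its test pass computes.
vanilla_train = mean_train_output(a, ratio, inverted=False)
vanilla_test = a * (1.0 - ratio)

# Second variant: the training mean should approach the unscaled value a,
# which is what its test pass returns.
inverted_train = mean_train_output(a, ratio, inverted=True)
inverted_test = a

print(f"vanilla:  train mean {vanilla_train:.3f}, test {vanilla_test:.3f}")
print(f"inverted: train mean {inverted_train:.3f}, test {inverted_test:.3f}")
```

The check covers one activation only. In the two-layer networks above, the ReLU between layers means the training and test outputs match in expectation only approximately.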
