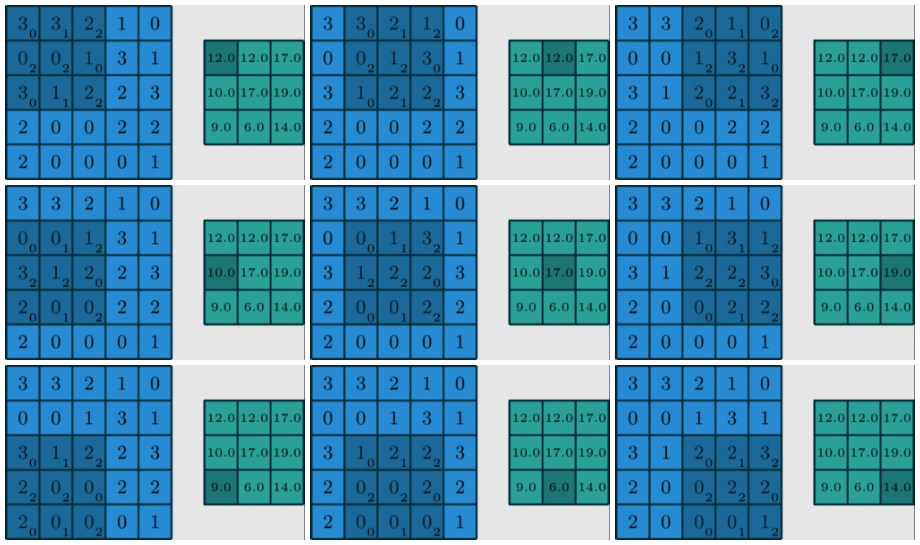

Blue: input_feature, 5×5

Small dark-blue numbers: the kernel, kernel_size 3×3

Green: out_feature, 3×3

stride = 1

padding = 0

channel = 1
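The sizes in the first figure follow the usual convolution output-size formula, floor((in + 2·padding − kernel) / stride) + 1. Below is a minimal sketch in plain Python, assuming no dilation. `conv_output_size` is a hypothetical helper for illustration, not a PyTorch function.

```python
import math

def conv_output_size(in_size, kernel_size, stride=1, padding=0):
    """Length of one spatial dimension of a 2D convolution output (dilation assumed to be 1)."""
    # floor((in + 2*padding - kernel) / stride) + 1
    return math.floor((in_size + 2 * padding - kernel_size) / stride) + 1

print(conv_output_size(5, 3, stride=1, padding=0))  # 3: first figure
print(conv_output_size(5, 3, stride=2, padding=1))  # 3: padding = 1, stride = 2
print(conv_output_size(5, 3, stride=1, padding=1))  # 5: 5x5 input, 3x3 kernel, padding = 1
```

The loop implementations in this post compute the same quantity, except that they pad the input first and then apply floor((in − kernel) / stride) + 1 to the padded size.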

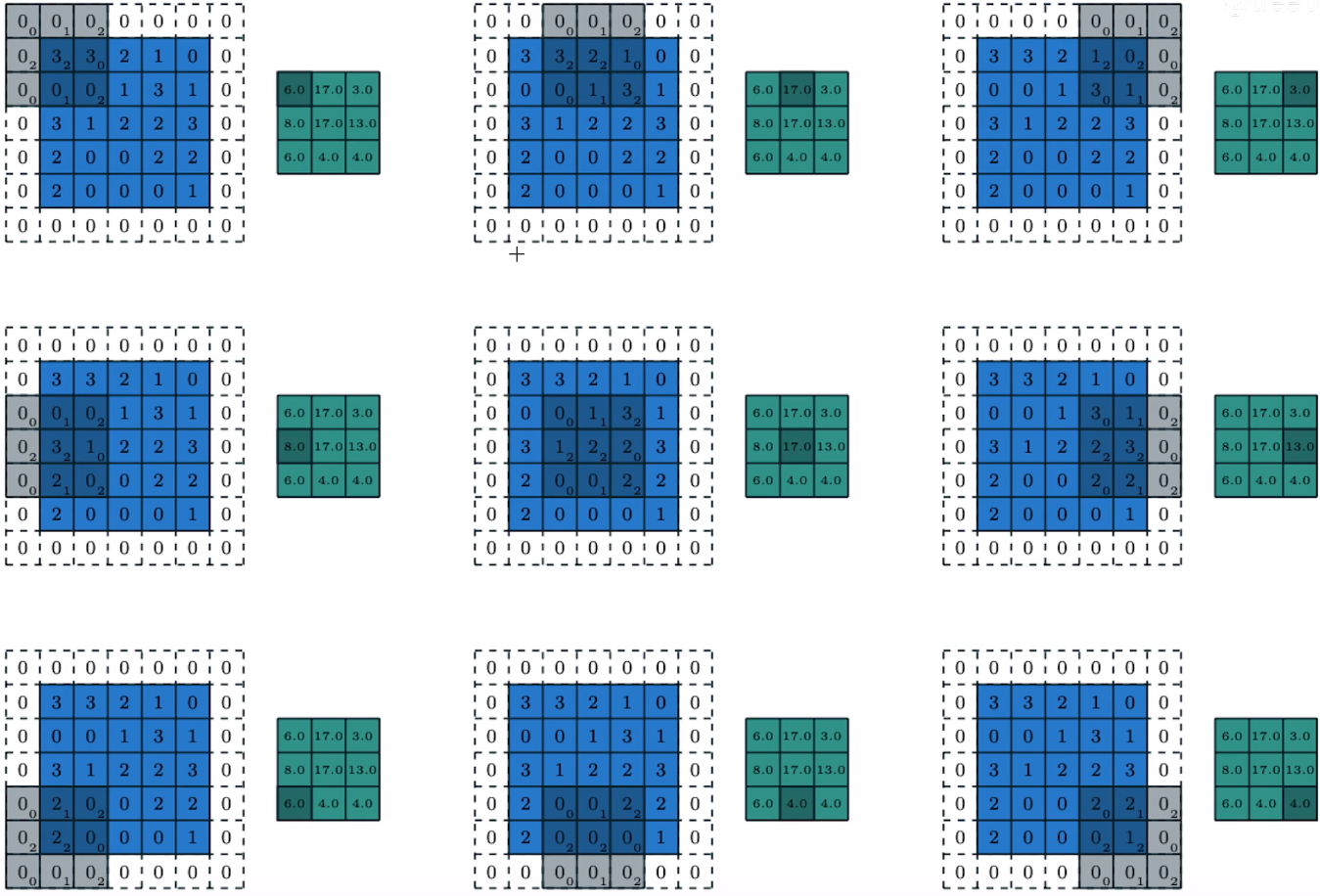

padding = 1

stride = 2
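With padding = 1, the 5×5 input becomes 7×7, and with stride = 2 the kernel's top-left corner only visits rows and columns 0, 2 and 4, which gives a 3×3 output. The following is a plain-Python sketch of that idea. `zero_pad` and `window_starts` are hypothetical helpers; `window_starts` uses the same `range(0, in - kernel + 1, stride)` as the loops in the code below.

```python
def zero_pad(matrix, padding):
    """Surround a 2D list with `padding` rows/columns of zeros (what F.pad does with value 0)."""
    width = len(matrix[0]) + 2 * padding
    padded = [[0] * width for _ in range(padding)]          # top zero rows
    for row in matrix:
        padded.append([0] * padding + list(row) + [0] * padding)  # left/right zeros
    padded.extend([0] * width for _ in range(padding))      # bottom zero rows
    return padded

def window_starts(in_size, kernel_size, stride):
    """Indices where the kernel's top-left corner lands along one dimension."""
    return list(range(0, in_size - kernel_size + 1, stride))

feature = [[r * 5 + c for c in range(5)] for r in range(5)]  # toy 5x5 input
padded = zero_pad(feature, padding=1)
print(len(padded), len(padded[0]))              # 7 7
print(window_starts(len(padded), 3, stride=2))  # [0, 2, 4] -> 3x3 output
```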

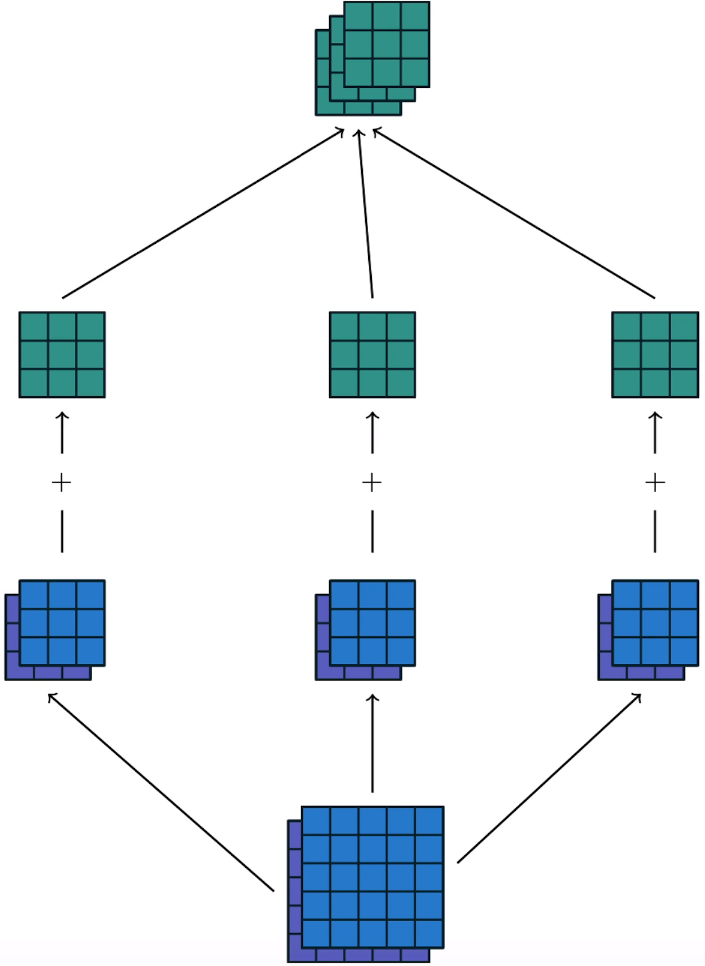

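Bottom: input_channels = 2

Top (green): out_channels = 3

kernels = 2*3 = 6 (the second-to-last row in the figure): each of the 3 output channels has its own 3×3 kernel for each of the 2 input channels.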
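In PyTorch these six 2D kernels are stored in one 4-D weight tensor of shape (out_channels, in_channels, kernel_h, kernel_w), which is the same layout the `kernel` tensor uses in the Func3 code. Here is a quick check, assuming a 3×3 kernel as in the figures:

```python
import torch.nn as nn

# 2 input channels, 3 output channels, 3x3 kernels (kernel size assumed from the figures)
conv = nn.Conv2d(in_channels=2, out_channels=3, kernel_size=3)
print(conv.weight.shape)  # torch.Size([3, 2, 3, 3])
print(conv.bias.shape)    # torch.Size([3]): one bias per output channel

# Number of individual 2D kernels = out_channels * in_channels
num_2d_kernels = conv.weight.shape[0] * conv.weight.shape[1]
print(num_2d_kernels)     # 6
```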

```python
import math

import torch
import torch.nn.functional as F

# In a real network these would be, for example:
#   input = input_feature_map        # input feature map of the convolution
#   kernel = conv_layer.weight.data  # convolution kernel
# Here random tensors are used instead:
input = torch.randn(5, 5)   # input feature map of the convolution
kernel = torch.randn(3, 3)  # convolution kernel
bias = torch.randn(1)       # convolution bias; a single value because there is one output channel

# Func1: implement 2D convolution with plain matrix operations, ignoring the batch_size and channel dimensions for now
def matrix_mutiplication_for_conv2d(input, kernel, bias=0, stride=1, padding=0):
    if padding > 0:
        input = F.pad(input, (padding, padding, padding, padding))  # zero-pad left, right, top and bottom
    input_h, input_w = input.shape
    kernel_h, kernel_w = kernel.shape
    output_w = math.floor((input_w - kernel_w) / stride) + 1  # output width
    output_h = math.floor((input_h - kernel_h) / stride) + 1  # output height
    output = torch.zeros(output_h, output_w)  # initialize the output matrix
    for i in range(0, input_h - kernel_h + 1, stride):  # slide over the height
        for j in range(0, input_w - kernel_w + 1, stride):  # slide over the width
            region = input[i:i + kernel_h, j:j + kernel_w]  # window currently covered by the kernel
            # element-wise product with the kernel, summed, plus bias, written to the output
            output[i // stride, j // stride] = torch.sum(region * kernel) + bias
    return output

# Result of the matrix-operation implementation
mat_mul_conv_output = matrix_mutiplication_for_conv2d(input, kernel, bias=bias, padding=1)
print(mat_mul_conv_output.shape, "\n", mat_mul_conv_output)

# Result of the PyTorch API (F.conv2d expects 4-D input and weight)
pytorch_api_conv_output = F.conv2d(input.reshape((1, 1, input.shape[0], input.shape[1])),
                                   kernel.reshape((1, 1, kernel.shape[0], kernel.shape[1])),
                                   padding=1,
                                   bias=bias)
# Verified: the matrix-operation convolution matches the PyTorch API result (single channel)
print(pytorch_api_conv_output.squeeze(0).squeeze(0).shape, "\n", pytorch_api_conv_output.squeeze(0).squeeze(0))

'''
torch.Size([5, 5])
tensor([[ 0.0254, 2.5571, 0.8080, -2.0241, 3.9600],
        [ 1.6969, 5.7820, -1.8596, 2.6106, 7.2310],
        [ 2.8070, 4.2363, -3.0085, 6.3041, 1.2186],
        [ 5.0747, -1.0790, 0.8183, 3.5965, -2.4651],
        [ 4.1119, -1.5446, 2.8745, 0.9343, 1.6732]])
torch.Size([5, 5])
tensor([[ 0.0254, 2.5571, 0.8080, -2.0241, 3.9600],
        [ 1.6969, 5.7820, -1.8596, 2.6106, 7.2310],
        [ 2.8070, 4.2363, -3.0085, 6.3041, 1.2186],
        [ 5.0747, -1.0790, 0.8183, 3.5965, -2.4651],
        [ 4.1119, -1.5446, 2.8745, 0.9343, 1.6732]])
'''
```

Func3: implement 2D convolution with plain matrix operations, this time including the batch_size and channel dimensions.

```python
import math

import torch
import torch.nn.functional as F

# input and kernel are both 4-D tensors; bias has one entry per output channel (shape [out_channel])
def matrix_mutiplication_for_conv2d_full(input, kernel, bias=None, padding=0, stride=2):
    if padding > 0:
        # zero-pad left, right, top and bottom; no padding on the channel and batch_size dimensions
        input = F.pad(input, (padding, padding, padding, padding, 0, 0, 0, 0))
    bs, in_channel, input_h, input_w = input.shape
    out_channel, in_channel, kernel_h, kernel_w = kernel.shape
    if bias is None:
        bias = torch.zeros(out_channel)
    output_w = math.floor((input_w - kernel_w) / stride) + 1  # output width
    output_h = math.floor((input_h - kernel_h) / stride) + 1  # output height
    output = torch.zeros(bs, out_channel, output_h, output_w)  # initialize the output tensor
    for index in range(bs):  # loop over samples in the batch
        for oc in range(out_channel):  # each output channel is the sum of contributions from all input channels
            for ic in range(in_channel):  # convolve each input feature map and accumulate into output channel oc
                for i in range(0, input_h - kernel_h + 1, stride):  # slide over the height
                    for j in range(0, input_w - kernel_w + 1, stride):  # slide over the width
                        region = input[index, ic, i:i + kernel_h, j:j + kernel_w]  # window covered by the kernel
                        output[index, oc, i // stride, j // stride] += torch.sum(region * kernel[oc, ic])
            output[index, oc] += bias[oc]  # add the bias once per (sample, output channel)
    return output

input = torch.randn(2, 2, 5, 5)   # bs * in_channel * in_h * in_w
kernel = torch.randn(3, 2, 3, 3)  # out_channel * in_channel * kernel_h * kernel_w
bias = torch.randn(3)             # one bias per output channel

pytorch_conv2d = F.conv2d(input, kernel, bias=bias, padding=1, stride=2)
mm_conv2d_full_output = matrix_mutiplication_for_conv2d_full(input, kernel, bias=bias, padding=1, stride=2)
print(pytorch_conv2d.shape, "\n", pytorch_conv2d)
print(mm_conv2d_full_output.shape, "\n", mm_conv2d_full_output)
print(torch.allclose(pytorch_conv2d, mm_conv2d_full_output, atol=1e-5))  # small tolerance for float32 rounding

'''
torch.Size([2, 3, 3, 3])
tensor([[[[ 1.1374, -0.0861, 0.4275],
          [ 3.6559, 2.5741, 1.3991],
          [ 0.9067, 9.3524, 1.1320]],

         [[ 0.8325, -1.5813, -2.5399],
          [ 0.8679, -8.0568, -4.0715],
          [-0.2598, -3.6637, -8.9900]],

         [[-4.8694, -3.0817, -1.4585],
          [-7.6027, 1.3716, -0.4906],
          [-1.1015, -7.1992, 0.9741]]],


        [[[-2.9367, -5.3354, -2.1468],
          [ 0.8508, 4.3884, -3.4202],
          [ 0.2540, -2.2766, 1.8590]],

         [[ 1.4410, 1.1040, -0.2459],
          [-5.6472, -0.8350, -3.9254],
          [ 0.5086, -3.6675, 1.0906]],

         [[-5.2750, -7.2560, -0.5371],
          [-3.2578, 0.9768, 4.3391],
          [-3.8552, 0.6670, -2.7320]]]])
torch.Size([2, 3, 3, 3])
tensor([[[[ 1.1374, -0.0861, 0.4275],
          [ 3.6559, 2.5741, 1.3991],
          [ 0.9067, 9.3524, 1.1320]],

         [[ 0.8325, -1.5813, -2.5399],
          [ 0.8679, -8.0568, -4.0715],
          [-0.2598, -3.6637, -8.9900]],

         [[-4.8694, -3.0817, -1.4585],
          [-7.6027, 1.3716, -0.4906],
          [-1.1015, -7.1992, 0.9741]]],


        [[[-2.9367, -5.3354, -2.1468],
          [ 0.8508, 4.3884, -3.4202],
          [ 0.2540, -2.2766, 1.8590]],

         [[ 1.4410, 1.1040, -0.2459],
          [-5.6472, -0.8350, -3.9254],
          [ 0.5086, -3.6675, 1.0906]],

         [[-5.2750, -7.2560, -0.5371],
          [-3.2578, 0.9768, 4.3391],
          [-3.8552, 0.6670, -2.7320]]]])
True
'''
```
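The loops above compute every output element separately. A common alternative, which is not the method used in this post, is im2col. In this approach, `F.unfold` copies every kernel-sized window into a column, so the whole convolution becomes a single matrix multiplication. This is a minimal sketch assuming dilation = 1 and groups = 1. The name `conv2d_via_unfold` is hypothetical.

```python
import torch
import torch.nn.functional as F

def conv2d_via_unfold(input, kernel, bias=None, stride=1, padding=0):
    """2D convolution as im2col + matmul (dilation=1, groups=1 assumed)."""
    bs, in_channel, in_h, in_w = input.shape
    out_channel, _, kernel_h, kernel_w = kernel.shape
    out_h = (in_h + 2 * padding - kernel_h) // stride + 1
    out_w = (in_w + 2 * padding - kernel_w) // stride + 1
    # cols: (bs, in_channel*kernel_h*kernel_w, out_h*out_w), one column per window
    cols = F.unfold(input, kernel_size=(kernel_h, kernel_w), padding=padding, stride=stride)
    # Flatten each output channel's kernels into one row: (out_channel, in_channel*kernel_h*kernel_w)
    weight = kernel.reshape(out_channel, -1)
    out = weight @ cols  # broadcast over the batch -> (bs, out_channel, out_h*out_w)
    if bias is not None:
        out = out + bias.reshape(1, -1, 1)  # one bias per output channel
    return out.reshape(bs, out_channel, out_h, out_w)

input = torch.randn(2, 2, 5, 5)
kernel = torch.randn(3, 2, 3, 3)
bias = torch.randn(3)
out = conv2d_via_unfold(input, kernel, bias=bias, padding=1, stride=2)
print(out.shape)  # torch.Size([2, 3, 3, 3])
print(torch.allclose(out, F.conv2d(input, kernel, bias=bias, padding=1, stride=2), atol=1e-5))
```

The trade-off is memory. `cols` holds in_channel·kernel_h·kernel_w values per output position, but the sliding-window loops are replaced by one batched matmul.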
