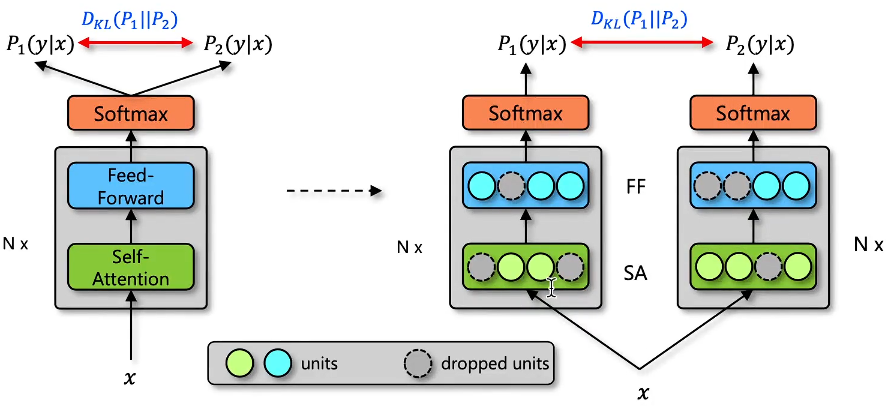

R-Drop: Regularized Dropout for Neural Networks

Dropout is a powerful and widely used technique to regularize the training of deep neural networks.

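Dropout behaves inconsistently between training and inference: each training step samples a random sub-model, which resembles ensemble learning, while inference uses the full model. R-Drop reduces this gap by passing each input through the network twice and adding a KL-divergence term between the output distributions of the two resulting sub-models.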
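As a rough guide to what the code in these notes computes, the per-sample objective can be written as follows. Here $P_1$ and $P_2$ denote the output distributions of the two dropout sub-models for the same input $x_i$ with label $y_i$. The weight $\alpha$ and the symmetric, halved form of the KL term are assumptions made for this sketch; the code in these notes simply adds the KL term with weight 1.

```latex
\mathcal{L}_i =
  -\log P_1(y_i \mid x_i) - \log P_2(y_i \mid x_i)
  + \frac{\alpha}{2}\Big[
      D_{\mathrm{KL}}\big(P_1(\cdot \mid x_i)\,\|\,P_2(\cdot \mid x_i)\big)
    + D_{\mathrm{KL}}\big(P_2(\cdot \mid x_i)\,\|\,P_1(\cdot \mid x_i)\big)
  \Big]
```

The first two terms correspond to `nll1` and `nll2`, and the bracketed term corresponds to `kl_loss`.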

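import numpy as np

def log_softmax(z):
    # numerically stable log-softmax over the class axis
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def nll(logits, label):
    # mean negative log-likelihood of the integer class labels
    return -log_softmax(logits)[np.arange(len(label)), label].mean()

def kl(logits1, logits2):
    # symmetric KL divergence between the two predicted distributions (assumed form)
    lp, lq = log_softmax(logits1), log_softmax(logits2)
    p, q = np.exp(lp), np.exp(lq)
    return 0.5 * ((p * (lp - lq)).sum(axis=1) + (q * (lq - lp)).sum(axis=1)).mean()

def train_r_drop(ratio, x, label, w1, b1, w2, b2):
    # x has shape (batch, features); w1 and w2 have shape (in_features, out_features)
    bs = x.shape[0]
    # duplicate the input so that each sample goes through two different dropout masks
    x = np.concatenate([x, x], axis=0)
    layer1 = np.maximum(0, np.dot(x, w1) + b1)
    mask1 = np.random.binomial(1, 1 - ratio, layer1.shape)
    layer1 = layer1 * mask1
    layer2 = np.maximum(0, np.dot(layer1, w2) + b2)
    mask2 = np.random.binomial(1, 1 - ratio, layer2.shape)
    layer2 = layer2 * mask2
    # the original notes apply an unspecified output head `func` here; the identity is assumed
    logits = layer2
    logits1, logits2 = logits[:bs, :], logits[bs:, :]
    nll1 = nll(logits1, label)
    nll2 = nll(logits2, label)
    kl_loss = kl(logits1, logits2)
    loss = nll1 + nll2 + kl_loss
    return loss

def test(ratio, x, w1, b1, w2, b2):
    # no mask at inference; activations are scaled by the keep probability instead
    layer1 = np.maximum(0, np.dot(x, w1) + b1)
    layer1 = layer1 * (1 - ratio)
    layer2 = np.maximum(0, np.dot(layer1, w2) + b2)
    layer2 = layer2 * (1 - ratio)
    return layer2

import torch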

conv_layer = torch.nn.Conv2d(in_channels=2, out_channels=2, kernel_size=3, padding="same")

for i in conv_layer.named_parameters():
    print(i)

'''
('weight', Parameter containing:
tensor([[[[-0.1885,  0.1133, -0.0103],
          [-0.0462, -0.1434, -0.0569],
          [ 0.1969,  0.0827, -0.1245]],

         [[ 0.1554,  0.1459,  0.0405],
          [-0.0806,  0.1438, -0.1319],
          [-0.1495, -0.0960,  0.2193]]],


        [[[-0.1748,  0.1517,  0.0040],
          [ 0.0097,  0.1565,  0.1850],
          [-0.0261,  0.1298,  0.0211]],

         [[-0.0964, -0.0133, -0.0378],
          [ 0.0617, -0.2037,  0.0011],
          [-0.0542, -0.1499,  0.0187]]]], requires_grad=True))
('bias', Parameter containing:
tensor([0.0560, 0.2200], requires_grad=True))
'''

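print(conv_layer.weight.size())
'''
For output channel 1:
each of the 2 input channels has its own 3×3 kernel; each kernel is convolved with its input channel and the two results are summed, giving the feature values of output channel 1.
For output channel 2:
another 2 kernels are convolved with the 2 input channels, giving the feature values of output channel 2.
torch.Size([2, 2, 3, 3])  3, 3 is the kernel size; 2, 2 is out_channels × in_channels
'''
print(conv_layer.bias.size())
'''
torch.Size([2])  matches the number of output channels
'''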
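The channel-summing rule in the notes above can be checked by hand. The following is a minimal sketch, assuming a random 5×5 input and a freshly initialized layer (both chosen only for illustration): it rebuilds output channel 1 (index 0) from its two 3×3 kernels and compares the result with what `Conv2d` returns.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
conv = torch.nn.Conv2d(in_channels=2, out_channels=2, kernel_size=3, padding="same")
x = torch.randn(1, 2, 5, 5)  # (batch, channels, height, width)

with torch.no_grad():
    reference = conv(x)  # shape (1, 2, 5, 5)

    # Rebuild output channel 0 by hand: one 3x3 kernel per input channel,
    # each applied to its own input channel, then summed, then the bias is added.
    manual = torch.zeros(1, 1, 5, 5)
    for c in range(2):
        kernel = conv.weight[0, c].reshape(1, 1, 3, 3)
        manual += F.conv2d(x[:, c:c + 1], kernel, padding=1)
    manual += conv.bias[0]

channel_matches = torch.allclose(manual[:, 0], reference[:, 0], atol=1e-5)
print(channel_matches)
```

Output channel 2 follows the same pattern with `conv.weight[1, c]` and `conv.bias[1]`.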

conv_layer = torch.nn.Conv2d(in_channels=2, out_channels=4, kernel_size=3, padding="same")

print(conv_layer.weight.size())

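'''
Each of the 4 output channels has 2 3×3 kernels, one per input channel, that slide over the input; the 2 per-channel results are summed and the bias is added to give that output channel's feature values.
torch.Size([4, 2, 3, 3])
'''

Conv2d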

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

- stride controls the stride for the cross-correlation, a single number or a tuple.
- padding controls the amount of padding applied to the input. It can be either a string {'valid', 'same'} or an int / a tuple of ints giving the amount of implicit padding applied on both sides.
- dilation controls the spacing between the kernel points; also known as the à trous algorithm. It is harder to describe, but the PyTorch documentation links to a visualization of what dilation does.
- groups controls the connections between inputs and outputs. in_channels and out_channels must both be divisible by groups. For example,
  - At groups=1, all inputs are convolved to all outputs.
  - At groups=2, the operation becomes equivalent to having two conv layers side by side, each seeing half the input channels and producing half the output channels, and both subsequently concatenated.
  - At groups=in_channels, each input channel is convolved with its own set of filters (of size out_channels / in_channels).

The parameters kernel_size, stride, padding, dilation can either be:

- a single int, in which case the same value is used for the height and width dimension
- a tuple of two ints, in which case the first int is used for the height dimension and the second int for the width dimension
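The parameter descriptions above are easier to follow with concrete shapes. The sketch below assumes a 16×16 input with 4 channels and 8 output channels; these sizes are picked only for illustration. It records the output shape produced by each setting.

```python
import torch

x = torch.randn(1, 4, 16, 16)  # (batch, channels, height, width)

# A single int applies to both height and width; a tuple is (height, width).
square = torch.nn.Conv2d(4, 8, kernel_size=3)
rect = torch.nn.Conv2d(4, 8, kernel_size=(3, 5))
# stride=2 with padding=1 halves the 16x16 spatial size.
strided = torch.nn.Conv2d(4, 8, kernel_size=3, stride=2, padding=1)
# dilation=2 spreads the 3x3 kernel points over a 5x5 window.
dilated = torch.nn.Conv2d(4, 8, kernel_size=3, dilation=2)
# groups=in_channels: each input channel gets its own out_channels / in_channels = 2 filters.
depthwise = torch.nn.Conv2d(4, 8, kernel_size=3, groups=4)

shapes = {
    "square": tuple(square(x).shape),
    "rect": tuple(rect(x).shape),
    "strided": tuple(strided(x).shape),
    "dilated": tuple(dilated(x).shape),
    "depthwise": tuple(depthwise(x).shape),
}
depthwise_weight_shape = tuple(depthwise.weight.shape)

for name, shape in shapes.items():
    print(name, shape)
print("depthwise weight", depthwise_weight_shape)
```

With groups=4 the weight has a second dimension of 1, because every filter sees only a single input channel.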

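Depthwise separable convolution

groups > 1, where groups must evenly divide both in_channels and out_channels. The input and output channels are each split into that many groups, and every group is convolved independently.

Benefits:

1. The channels are not fully mixed. For example, with 8 channels split into 2 groups, the first 4 channels and the last 4 channels are each convolved as a separate group.
2. The amount of computation drops.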
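Both points can be checked numerically. This is a minimal sketch using the 8-channel, 2-group example from the list above; the 6×6 input size and the use of `torch.nn.functional.conv2d` to replay each group are choices made here for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
full = torch.nn.Conv2d(8, 8, kernel_size=3, padding="same")
grouped = torch.nn.Conv2d(8, 8, kernel_size=3, padding="same", groups=2)

# Weight counts: 8 * 8 * 3 * 3 for the full layer, 8 * 4 * 3 * 3 for the grouped one.
full_weights = full.weight.numel()
grouped_weights = grouped.weight.numel()

x = torch.randn(1, 8, 6, 6)
with torch.no_grad():
    # Group 0 maps input channels 0-3 to output channels 0-3,
    # group 1 maps input channels 4-7 to output channels 4-7.
    first = F.conv2d(x[:, :4], grouped.weight[:4], grouped.bias[:4], padding=1)
    second = F.conv2d(x[:, 4:], grouped.weight[4:], grouped.bias[4:], padding=1)
    split_result = torch.cat([first, second], dim=1)
    same_as_grouped = torch.allclose(grouped(x), split_result, atol=1e-5)

print(full_weights, grouped_weights, same_as_grouped)
```

Halving the weight count also halves the multiply-accumulate operations per output position, which is where the saving in computation comes from.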

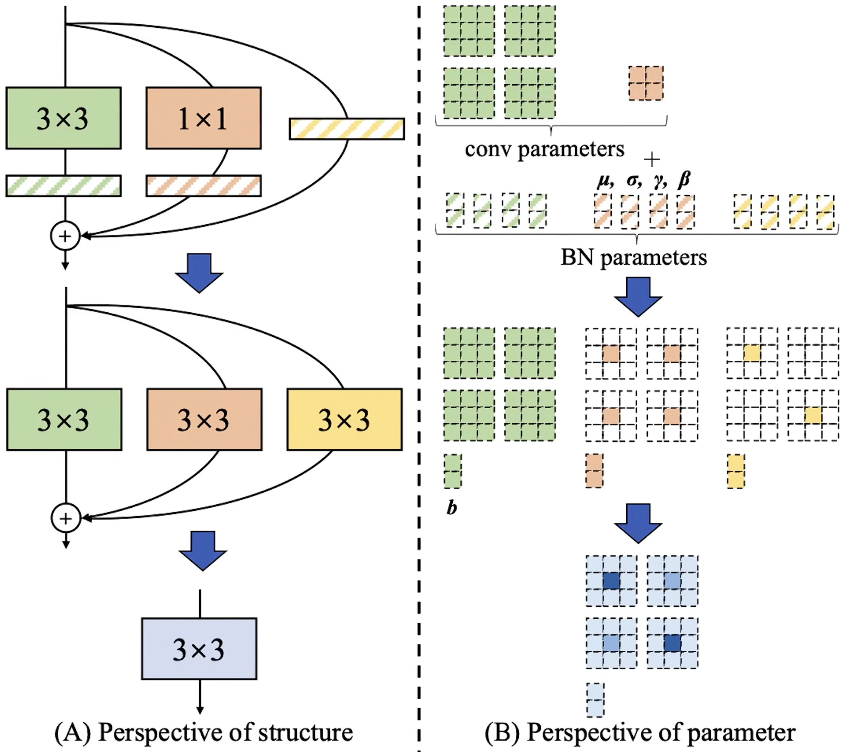

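RepConv: a variant of the ResNet architecture

point-wise convolution

A 1×1 convolution.

Basic assumptions of convolution: locality and translation invariance.

A point-wise convolution ignores neighboring points and looks only at each point itself: it just computes a weighted sum of the values across the channels at that point.

In other words, it only mixes channels.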
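To make the "weighted sum across channels" reading concrete, the sketch below compares a 1×1 convolution with an explicit per-pixel matrix product. The channel counts (3 in, 5 out) and the input size are arbitrary choices for this illustration.

```python
import torch

torch.manual_seed(0)
pointwise = torch.nn.Conv2d(in_channels=3, out_channels=5, kernel_size=1)
x = torch.randn(2, 3, 4, 4)  # (batch, channels, height, width)

with torch.no_grad():
    conv_out = pointwise(x)
    # The weight has shape (5, 3, 1, 1); dropping the 1x1 axes leaves a 5x3 mixing matrix.
    mix = pointwise.weight[:, :, 0, 0]
    # Apply the same matrix to the channel vector at every pixel, then add the bias.
    manual = torch.einsum("oc,bchw->bohw", mix, x) + pointwise.bias.view(1, -1, 1, 1)

pointwise_is_channel_mix = torch.allclose(conv_out, manual, atol=1e-5)
print(pointwise_is_channel_mix)
```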

depth-wise convolution

With groups > 1 the convolution does not mix all channels; the channels are split into groups and mixing happens only within each group.

import torch

# groups=2 splits the layer into 2 parts:
# 1. a convolution with 1 input channel and 2 output channels;
# 2. another convolution with 1 input channel and 2 output channels.
# The two outputs are concatenated at the end. This reduces the amount of computation.
conv_layer = torch.nn.Conv2d(in_channels=2, out_channels=4, kernel_size=3, padding="same", groups=2)
print(conv_layer.weight.size())
print(conv_layer.bias.size())
'''
torch.Size([4, 1, 3, 3])
torch.Size([4])
'''
conv_layer1 = torch.nn.Conv2d(in_channels=1, out_channels=2, kernel_size=3, padding="same")
conv_layer2 = torch.nn.Conv2d(in_channels=1, out_channels=2, kernel_size=3, padding="same")
print(conv_layer1.weight.size())
print(conv_layer2.weight.size())
print(conv_layer1.bias.size())
print(conv_layer2.bias.size())
'''
torch.Size([2, 1, 3, 3])
torch.Size([2, 1, 3, 3])
torch.Size([2])
torch.Size([2])
'''

How can a 3×3 convolution, a 1×1 convolution, and x itself be fused into a single 3×3 convolution?

import torch
import torch.nn as nn
import torch.nn.functional as F

in_channels = 2
out_channels = 2
kernel_size = 3
w = 9
h = 9

# res_block = 3 * 3 conv + 1 * 1 conv + input
# Define the input image size
x = torch.ones(1, in_channels, w, h)

# Method 1: direct implementation
# Input and output channel counts match; the 3 * 3 conv needs padding
conv_2d = nn.Conv2d(in_channels, out_channels, kernel_size, padding="same")
# The 1 * 1 conv only looks at the channels of each pixel, not at neighboring points, so it needs no padding
conv_2d_pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1, padding="same")
result1 = conv_2d(x) + conv_2d_pointwise(x) + x
# print(result1)

# Method 2: operator fusion (RepConv)
# 1) Rewrite
# Express both the point-wise conv and x itself as 3 * 3 convs,
# so that the 3 convs can finally be fused into 1 conv
# Expand the point-wise kernel weight from 1 * 1 to 3 * 3
# weight: 2(O) 2(I) 1(K) 1(K) => 2(O) 2(I) 3(K1) 3(K2)
# F.pad pads from the innermost dimension outward: first K2, then K1, one 0 on each side; O and I are not padded
pointwise_to_conv_weight = F.pad(conv_2d_pointwise.weight, pad=[1, 1, 1, 1, 0, 0, 0, 0])
conv_2d_for_pointwise = nn.Conv2d(in_channels, out_channels, kernel_size, padding="same")
conv_2d_for_pointwise.weight = nn.Parameter(pointwise_to_conv_weight)
conv_2d_for_pointwise.bias = conv_2d_pointwise.bias

# Turn x itself into a 3 * 3 conv whose output is still x
# No mixing of neighboring points => `point-wise convolution`
# No mixing across channels => `depth-wise convolution`
# weight: 2 * 2 * 3 * 3
# 1st 3 * 3 kernel: 1 at the center, 0 elsewhere; 2nd 3 * 3 kernel: all 0
# 4th 3 * 3 kernel: 1 at the center, 0 elsewhere; 3rd 3 * 3 kernel: all 0
# All-zero kernel: ignores the other channel
zeros = torch.unsqueeze(torch.zeros(kernel_size, kernel_size), 0)
# Center-1 kernel: ignores neighboring points
stars = torch.unsqueeze(F.pad(torch.ones(1, 1), pad=[1, 1, 1, 1]), 0)
# Kernels for output channel 1
stars_zeros = torch.unsqueeze(torch.cat([stars, zeros], 0), 0)
# Kernels for output channel 2
zeros_stars = torch.unsqueeze(torch.cat([zeros, stars], 0), 0)
identity_to_conv_weight = torch.cat([stars_zeros, zeros_stars], 0)
identity_to_conv_bias = torch.zeros([out_channels])
conv_2d_for_identity = nn.Conv2d(in_channels, out_channels, kernel_size, padding="same")
conv_2d_for_identity.weight = nn.Parameter(identity_to_conv_weight)
conv_2d_for_identity.bias = nn.Parameter(identity_to_conv_bias)

result2 = conv_2d(x) + conv_2d_for_pointwise(x) + conv_2d_for_identity(x)
# print(result2)
print(torch.all(torch.isclose(result1, result2)))

# At this point both the 1 * 1 conv and x itself have been rewritten as 3 * 3 convs
# 2) Fuse
conv_2d_for_fusion = nn.Conv2d(in_channels, out_channels, kernel_size, padding="same")
conv_2d_for_fusion.weight = nn.Parameter(conv_2d.weight.data + conv_2d_for_pointwise.weight.data + conv_2d_for_identity.weight.data)
conv_2d_for_fusion.bias = nn.Parameter(conv_2d.bias.data + conv_2d_for_pointwise.bias.data + conv_2d_for_identity.bias.data)
result3 = conv_2d_for_fusion(x)
print(torch.all(torch.isclose(result2, result3)))
'''
tensor(True)
tensor(True)
'''
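The same fusion can be written for any number of channels. The sketch below is one possible generalization, not the notes' own code: `fuse_branches` is a hypothetical helper name, it assumes equal input and output channel counts, and it is checked on a random input rather than an all-ones one.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def fuse_branches(conv3x3, conv1x1):
    """Hypothetical helper: fold a 3x3 conv, a 1x1 conv and an identity branch into one 3x3 conv."""
    channels = conv3x3.in_channels
    assert conv3x3.out_channels == channels == conv1x1.in_channels == conv1x1.out_channels
    fused = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
    with torch.no_grad():
        # 1x1 kernel zero-padded to 3x3 (F.pad pads the last two dimensions here).
        w_pointwise = F.pad(conv1x1.weight, [1, 1, 1, 1])
        # Identity branch: a 1 at the center of kernel [i, i], zeros everywhere else.
        w_identity = torch.zeros_like(conv3x3.weight)
        idx = torch.arange(channels)
        w_identity[idx, idx, 1, 1] = 1.0
        # Convolution is linear in its weights, so the three branches add up.
        fused.weight.copy_(conv3x3.weight + w_pointwise + w_identity)
        fused.bias.copy_(conv3x3.bias + conv1x1.bias)
    return fused

torch.manual_seed(0)
conv3x3 = nn.Conv2d(4, 4, kernel_size=3, padding=1)
conv1x1 = nn.Conv2d(4, 4, kernel_size=1)
x = torch.randn(2, 4, 9, 9)

with torch.no_grad():
    branches = conv3x3(x) + conv1x1(x) + x
    fused_out = fuse_branches(conv3x3, conv1x1)(x)

fusion_matches = torch.allclose(branches, fused_out, atol=1e-5)
print(fusion_matches)
```

After fusion a single convolution replaces three branches at inference time, which is the point of the re-parameterization.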
